Discover LLMO, the evolution of SEO, and navigate the four horizons to future-proof your brand in the AI era. Learn to optimise for AI models, voice assistants, and agents.

Organic traffic is vanishing, and not just because of algorithm updates.

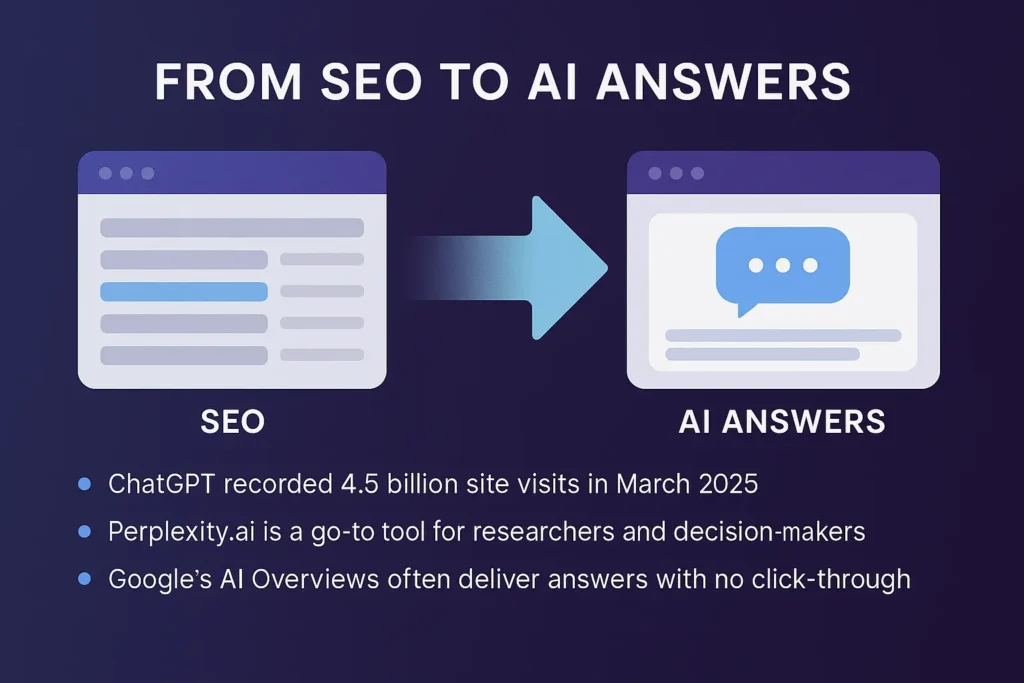

A study from Ahrefs shows that when Google AI Overviews pop up in search results, the top-ranking pages get about 34.5% fewer clicks than if those AI Overviews weren’t there.

AI answer engines like ChatGPT, Gemini, and Perplexity are reshaping how users discover information. As summarisation replaces search and AI citations outrank backlinks, brands are facing a new visibility cliff. If your Google traffic dropped recently, it may not be an SEO issue at all.

But there may be a way out, after all.

As answer engines redefine discoverability and replace traditional search interfaces, brands must shift from chasing links to earning mentions, structuring data, and aligning with AI-native UX. LLMO aims to ensure your brand content is not just found, but featured.

This guide introduces the four horizons of LLMO: a maturity model for staying visible in a zero-click, AI-curated ecosystem. It’s designed for marketers and strategists building visibility in a post-search era.

Enter: Large Language Model Optimisation (LLMO). It’s not a replacement for SEO, but a critical evolution that ensures your content remains:

- Findable

- Citable

- Chosen

…by the AI systems that now shape the user journey.

In this guide, we’ll break down the key differences between SEO and LLMO, explain how AI systems retrieve and rank content, and show what to optimise when AI disrupts your traffic.

What’s Changing: From Blue Links to Answer Boxes

Search is shifting from lists you click to answers you receive.

Traditional SEO was built on crawling, indexing and ranking blue links, but AI interfaces have rewritten the flow. Instead of showing ten links, systems like ChatGPT, Gemini and Perplexity deliver summarised answers that blend retrieval, reasoning and citation.

AI models no longer point users to a page; they pull the answer from the page. Visibility depends on how clearly your content can be parsed, summarised and cited.

Here is what’s driving the shift:

- ChatGPT recorded 4.5 billion site visits in March 2025, and its integrated web search lets it answer with live, cited web content.

- Perplexity.ai, known for its real-time citation capabilities, is now a go-to tool for researchers and decision-makers.

As Rand Fishkin, co-founder of SparkToro, notes: “The currency of LLMs is not links, it’s mentions.” In other words, if you’re not being cited across trusted sources, you’re invisible in AI answers.

According to internal tests that LangSync, a world-leading LLMO agency, ran with PromptLayer and Langfuse, brand citations in ChatGPT (GPT-4o) increased by over 30% when FAQ schema and structured author bios were added. This suggests that formatting for answerability matters as much as content quality.

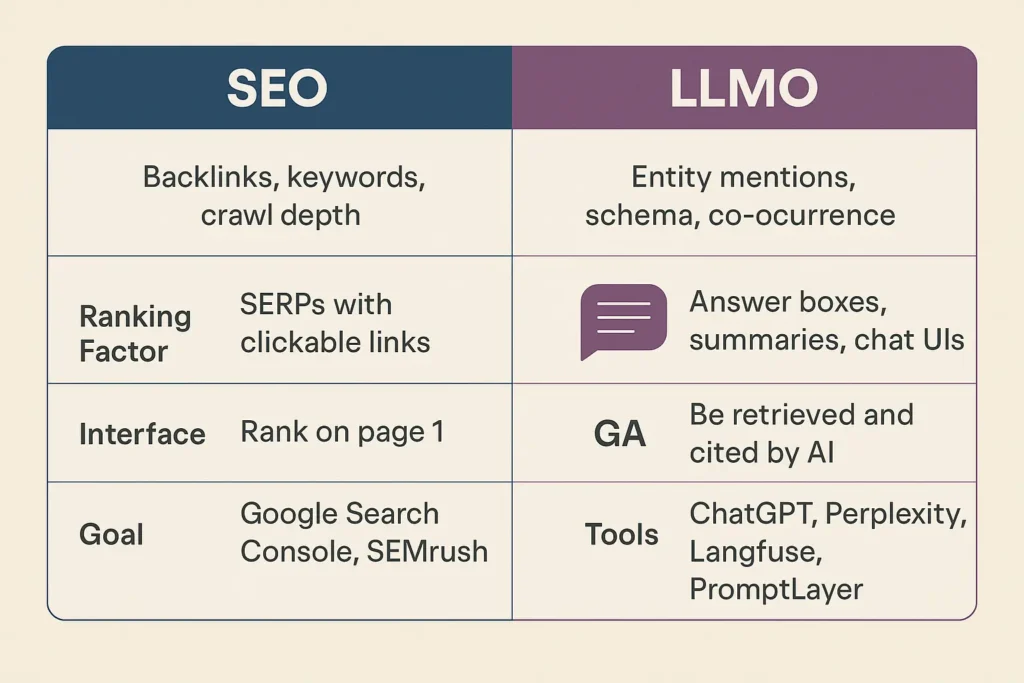

SEO vs. LLMO: Key Differences

| Feature | SEO (Search Engine Optimisation) | LLMO (Large Language Model Optimisation) |
| --- | --- | --- |
| Ranking factors | Backlinks, keywords, crawl depth | Entity mentions, schema, co-occurrence |
| Interface | SERPs with clickable links | Answer boxes, summaries, chat UIs |
| Goal | Rank on page 1 | Be retrieved and cited by AI |
| Tools | Google Search Console, Semrush | ChatGPT, Perplexity, Langfuse, PromptLayer |

How AI Models Decide What to Cite

AI systems do not rank pages the way a search engine does.

They retrieve, interpret and compress information into answer blocks. To decide what to cite, they draw on a mix of entity clarity, schema signals and how your content is structured. In practice, they tend to cite content that is easy to parse, rich in entities and formatted into clear, answer-ready blocks. Schema, author signals and structured metadata can increase the likelihood of a citation.

Here are the core signals that matter:

1. Entity Mentions Over Backlinks:

Models look for brands, people, tools and topics that appear consistently across trusted sources.

If your brand is mentioned in podcasts, interviews, reports and third-party content, it becomes a stronger retrieval candidate.

2. Structured Data Everywhere:

FAQPage, Article, HowTo and Person (for author details) schema help AI systems understand roles, claims and context.

Clear author attribution, updated timestamps and content signatures improve trust signals.

3. Prompt-Tested Content:

AI models tend to favour text that looks like it already belongs in an answer box.

Short definitions, bullet points, numbered steps and 30- to 50-word summaries are more likely to be quoted.

4. Answer-Ready Formatting:

Headings, subheadings, and tight sections help models navigate your content.

When each section covers one clearly labelled idea, it becomes easier for AI to extract accurate fragments.

5. Contextual References Across Channels:

Mentions across news, opinion sites, research blogs, academic pieces and social clips help reinforce authority.

Backlinks matter less than contextual references.
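To make the author and timestamp signals from point 2 concrete, here is a minimal Python sketch that emits schema.org Article markup as JSON-LD. Note that schema.org has no standalone "Author" type: authorship is expressed through the `author` property, which takes a `Person` (or `Organization`). The function name `article_jsonld`, the author and the example.com URLs are hypothetical placeholders, and there is no guarantee that a given AI system reads this markup.

```python
import json
from datetime import date


def article_jsonld(headline: str, url: str, author_name: str, author_url: str,
                   published: date, modified: date) -> str:
    """Return schema.org Article markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": url,
        # Authorship is the `author` property pointing at a Person node.
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        # ISO 8601 dates make publication and update times machine-readable.
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)


print(article_jsonld(
    headline="LLMO vs SEO: Key Differences",
    url="https://example.com/llmo-vs-seo",
    author_name="Jane Doe",
    author_url="https://example.com/authors/jane-doe",
    published=date(2025, 1, 15),
    modified=date(2025, 6, 1),
))
```

Place the printed JSON inside a `<script type="application/ld+json">` tag in the page, and update `dateModified` whenever the content genuinely changes.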

Pro Tip: Use Langfuse to log your prompt tests and map co-occurrence density across them, for example by analysing which brand or product terms co-occur most frequently in LLM outputs. Use PromptLayer to log and compare responses along zero-click prompt paths. Together, these help you understand how LLMs chain context, so you can improve the odds that your content surfaces across varied user queries.

Here’s how:

Export the logs from your AI prompt tests (for example, traces recorded in Langfuse) and analyse which entities co-occur to identify high-impact associations. Then, use PromptLayer to run and log user queries that don’t require clicks (e.g., “best productivity software for remote teams”) to see how LLMs chain information.

If your brand doesn’t appear or gets cited without core context, optimise content to tighten those entity relationships.

Combining prompt-log insights from Langfuse with the query runs you log in PromptLayer can help you reverse-engineer how content spreads across AI conversations, and then reinforce those pathways with targeted schema and structured data.
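The co-occurrence analysis described above can be approximated in plain Python once you have exported response texts from your prompt tests (for example, from Langfuse traces). This is a minimal sketch, not a Langfuse or PromptLayer feature: the export step is assumed, and `entity_cooccurrence`, the sample outputs and the brand “Acme Notes” are hypothetical.

```python
import re
from collections import Counter
from itertools import combinations


def entity_cooccurrence(outputs: list[str], entities: list[str]) -> Counter:
    """Count how often each pair of tracked entities appears in the same LLM output.

    Matching is case-insensitive and uses word boundaries, so it suits plain
    names like "Trello" but not terms that start or end with symbols.
    """
    patterns = {e: re.compile(r"\b" + re.escape(e) + r"\b", re.IGNORECASE) for e in entities}
    pair_counts: Counter = Counter()
    for text in outputs:
        # Sort so each pair is always counted under the same key order.
        present = sorted(e for e, pattern in patterns.items() if pattern.search(text))
        for pair in combinations(present, 2):
            pair_counts[pair] += 1
    return pair_counts


# Hypothetical response texts exported from prompt tests.
sample_outputs = [
    "For remote teams, Acme Notes and Trello are popular productivity picks.",
    "Trello and Asana lead for task tracking; Acme Notes suits async docs.",
    "Asana is a strong choice for remote teams.",
]
tracked = ["Acme Notes", "Trello", "Asana", "remote teams"]

for pair, count in entity_cooccurrence(sample_outputs, tracked).most_common(3):
    print(pair, count)
```

Pairs that involve your brand but show low counts point to the entity relationships worth tightening; here, for instance, the hypothetical brand is never mentioned alongside “remote teams” outside the first answer.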

LLMO SEO Best Practices for 2025

These are the core LLMO SEO practices brands should focus on in 2025 to stay visible in AI answers, summaries, voice responses, and zero-click environments.

- Strengthen entity mentions across trusted, third-party sources

- Upgrade your schema coverage (Article, FAQPage, HowTo, and Person markup for authors)

- Break content into answer-ready blocks of 30 to 50 words

- Test prompts monthly to track brand visibility in ChatGPT, Gemini, and Perplexity

- Add API-friendly metadata for agents and AI tools

- Monitor co-occurrence patterns using Langfuse and PromptLayer

These practices connect classic SEO and LLMO, giving you coverage across both search results and AI-generated answers.

Case in Point: Google’s AI Overviews

Mainstream media is taking notice, too.

A recent article from the New York Post reported that publishers are seeing traffic declines due to Google’s AI-generated summaries, with some media executives warning that AI Overviews could “devastate” ad revenue by reducing clicks and time on site.

A study by Semrush and Datos found that 88.1% of the Google queries that trigger AI Overviews are informational. Sites not structured for summarisation or schema-rich delivery risk being skipped.

To stay visible:

- Use FAQPage, SpeakableSpecification, and HowTo schema types

- Ensure clear author attribution and content signatures

- Align metadata with what AI parses as helpful, trustworthy, and experience-based

Google AI Overview inclusion appears to correlate with schema-marked definitions and answer fragments of 30–50 words. Short, authoritative answers tend to outperform long-form explanations.
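A minimal sketch of FAQPage markup, assuming you keep question and answer pairs in code: it builds schema.org JSON-LD (a `FAQPage` whose `mainEntity` holds `Question` items, each with an `acceptedAnswer` of type `Answer`) and flags answers outside the 30–50 word range discussed above. That range is this guide’s heuristic rather than a Google requirement, and `faq_jsonld` is a hypothetical helper. Since 2023, Google has also limited FAQ rich results to a small set of authoritative government and health sites, so treat the markup as a machine-readable signal rather than a guaranteed SERP feature.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]], min_words: int = 30,
               max_words: int = 50) -> tuple[str, list[str]]:
    """Build schema.org FAQPage JSON-LD and flag answers outside the word range."""
    questions = []
    warnings = []
    for question, answer in pairs:
        n_words = len(answer.split())
        if not min_words <= n_words <= max_words:
            warnings.append(f"{question!r}: {n_words} words (target {min_words}-{max_words})")
        questions.append({
            "@type": "Question",
            "name": question,
            "acceptedAnswer": {"@type": "Answer", "text": answer},
        })
    markup = {"@context": "https://schema.org", "@type": "FAQPage", "mainEntity": questions}
    return json.dumps(markup, indent=2), warnings


# Sample answer for illustration (35 words, inside the target range).
markup, warnings = faq_jsonld([
    ("What is LLMO?",
     "Large Language Model Optimisation (LLMO) is the practice of structuring content, "
     "metadata and third-party mentions so that AI systems such as ChatGPT, Gemini and "
     "Perplexity can find, understand and cite it when they generate answers."),
])
print(markup)
for warning in warnings:
    print("Check:", warning)
```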

LLMO + SEO = Future-Proof Visibility

You don’t need to abandon SEO. But without LLMO, you’re not competing in the spaces where users are discovering answers. Combining both strategies ensures you’re present in:

- Classic search listings

- AI-generated answers

- Voice queries

- Autonomous agent transactions

Next Steps: Run an LLMO Visibility Audit

Follow these steps to run a quick LLMO visibility audit for your brand:

- Prompt ChatGPT, Gemini and Claude: How do they respond to buyer-aligned queries, and does your brand appear?

- Check Citations: Who do they quote, link to, or summarise?

- Reverse-Engineer Your Presence: Use schema, content restructuring, and earned media to improve how often you are retrieved and cited.
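The citation check in this audit can be partly automated once you have collected the answers. This is a minimal sketch, assuming you have pasted or exported each assistant’s answer text yourself; collecting answers through each vendor’s API is not shown. `audit_response`, `mention_rate`, the brand “Acme Notes” and the domain `acmenotes.example` are hypothetical. Brand detection is a simple case-insensitive substring match, so it will miss paraphrases.

```python
import re
from dataclasses import dataclass
from urllib.parse import urlparse

# Rough URL matcher: stops at whitespace, closing brackets and quotes.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


@dataclass
class AuditResult:
    engine: str
    query: str
    brand_mentioned: bool      # brand name appears anywhere in the answer
    brand_cited: bool          # brand's own domain appears among cited URLs
    cited_domains: list[str]   # unique domains in order of first appearance


def audit_response(engine: str, query: str, answer: str,
                   brand: str, brand_domain: str) -> AuditResult:
    domains: list[str] = []
    for url in URL_PATTERN.findall(answer):
        # Drop sentence punctuation that the pattern may have swallowed.
        host = urlparse(url.rstrip(".,;:")).netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        if host and host not in domains:
            domains.append(host)
    return AuditResult(
        engine=engine,
        query=query,
        brand_mentioned=brand.lower() in answer.lower(),
        brand_cited=brand_domain.lower() in domains,
        cited_domains=domains,
    )


def mention_rate(results: list[AuditResult]) -> dict[str, float]:
    """Share of audited queries, per engine, in which the brand was mentioned."""
    totals: dict[str, list[int]] = {}
    for r in results:
        hits_total = totals.setdefault(r.engine, [0, 0])
        hits_total[0] += int(r.brand_mentioned)
        hits_total[1] += 1
    return {engine: hits / total for engine, (hits, total) in totals.items()}


results = [
    audit_response("ChatGPT", "best note app for remote teams",
                   "Popular picks include Acme Notes (https://www.acmenotes.example/pricing) "
                   "and Notion (https://notion.so).",
                   brand="Acme Notes", brand_domain="acmenotes.example"),
    audit_response("Gemini", "best note app for remote teams",
                   "Notion and Slack are common choices. See https://slack.com.",
                   brand="Acme Notes", brand_domain="acmenotes.example"),
]
print(mention_rate(results))
```

Re-running the same query set each month gives you a simple trend line per engine for the “Test prompts monthly” practice.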

Feed your most important pages into vector databases such as Weaviate or Supabase Vector Store so they are easier to retrieve for private LLM applications built on retrieval-augmented generation.

How to Implement It:

- Chunk and Clean Content: Break each key page into semantic blocks (100–300 words each). Remove HTML clutter; keep plain, informative text.

- Generate Embeddings: Use OpenAI’s text-embedding-3-small or Cohere’s embedding models. Submit each chunk to the embedding API to get vector representations.

- Choose a Vector Store: Weaviate suits enterprise needs, while Supabase (Postgres with the pgvector extension) suits lightweight projects.

- Store with Metadata: Save embeddings with labels (title, URL, section summary) for retrieval.

- Enable Vector Search: Use cosine similarity to retrieve the most relevant chunk in response to queries.

- Monitor & Iterate: Update your stored chunks when content changes. Track vector hits using Langfuse or OpenLLMetry.
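The chunking and cleaning step can be sketched with Python’s standard library alone. This sketch uses a simple paragraph-aware strategy as a stand-in for true semantic chunking. HTML is first reduced to visible text, skipping `script`, `style`, `nav` and `footer`, and the paragraphs are then packed greedily into chunks of at most 300 words. The helper names are hypothetical. The 100-word minimum is only enforced by merging a short final chunk into the previous one.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping script, style and navigation markup."""
    SKIP_TAGS = {"script", "style", "nav", "footer"}
    BLOCK_TAGS = {"p", "li", "div", "br", "h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1
        elif tag in self.BLOCK_TAGS:
            self.parts.append("\n\n")  # mark a paragraph boundary

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_paragraphs(html: str) -> list[str]:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    blocks = "".join(parser.parts).split("\n\n")
    # Collapse internal whitespace and drop empty blocks.
    return [p for p in (re.sub(r"\s+", " ", b).strip() for b in blocks) if p]


def chunk_paragraphs(paragraphs: list[str], min_words: int = 100,
                     max_words: int = 300) -> list[str]:
    """Greedily pack whole paragraphs into chunks of at most max_words words."""
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in paragraphs:
        words = para.split()
        # Split any single paragraph that is longer than max_words on its own.
        for i in range(0, len(words), max_words):
            piece = words[i:i + max_words]
            if current and count + len(piece) > max_words:
                chunks.append(" ".join(current))
                current, count = [], 0
            current.extend(piece)
            count += len(piece)
    if current:
        # Merge a short final chunk into the previous one when it still fits.
        if chunks and count < min_words and len(chunks[-1].split()) + count <= max_words:
            chunks[-1] += " " + " ".join(current)
        else:
            chunks.append(" ".join(current))
    return chunks


page = ("<html><head><style>p { color: red; }</style></head><body>"
        "<nav>Home | Blog</nav>"
        + "".join(f"<p>{' '.join(['word'] * 120)}</p>" for _ in range(3))
        + "<script>track();</script></body></html>")
chunks = chunk_paragraphs(html_to_paragraphs(page))
print([len(c.split()) for c in chunks])  # → [240, 120]
```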
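For the embedding step, here is a minimal sketch that assumes the official `openai` Python SDK (v1 or later). Its `client.embeddings.create(model=..., input=[...])` call returns one item per input, and each item carries `index` and `embedding` fields. The client is passed in rather than created inside the function, so the helper can also be exercised offline with a stub. `embed_chunks` and the batch size of 100 are assumptions, not values from this guide.

```python
from typing import Any, Sequence


def embed_chunks(client: Any, chunks: Sequence[str],
                 model: str = "text-embedding-3-small",
                 batch_size: int = 100) -> list[list[float]]:
    """Return one embedding vector per chunk, in the same order as `chunks`."""
    vectors: list[list[float]] = []
    for start in range(0, len(chunks), batch_size):
        batch = list(chunks[start:start + batch_size])
        response = client.embeddings.create(model=model, input=batch)
        # Each item records its position in the batch; sort to keep chunk order.
        for item in sorted(response.data, key=lambda d: d.index):
            vectors.append(list(item.embedding))
    return vectors


# Usage with the official SDK (requires `pip install openai` and OPENAI_API_KEY):
# from openai import OpenAI
# client = OpenAI()
# vectors = embed_chunks(client, chunks)
```

Batching keeps the number of API calls low for long pages. Swapping in Cohere would mean writing a different call, because its SDK has its own request shape.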
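Storing chunks with metadata and searching by cosine similarity, cos(a, b) = a·b / (‖a‖‖b‖), can be illustrated with an in-memory list. This is a hypothetical stand-in for what Weaviate or Supabase do at scale, not either product’s API, and the toy three-dimensional vectors replace real embeddings. A SHA-256 hash of each chunk supports the “Monitor & Iterate” step by showing which chunks have changed and need re-embedding.

```python
import hashlib
import math
from dataclasses import dataclass


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class StoredChunk:
    text: str
    vector: list[float]
    metadata: dict[str, str]   # e.g. title, URL, section summary
    content_hash: str = ""

    def __post_init__(self) -> None:
        # Fingerprint the text at storage time for later change detection.
        if not self.content_hash:
            self.content_hash = _sha256(self.text)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(store: list[StoredChunk], query_vector: list[float],
           top_k: int = 1) -> list[tuple[float, StoredChunk]]:
    """Return the top_k chunks ranked by cosine similarity to the query."""
    scored = [(cosine_similarity(query_vector, c.vector), c) for c in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def needs_reembedding(chunk: StoredChunk, current_text: str) -> bool:
    """True when the live page text no longer matches what was embedded."""
    return _sha256(current_text) != chunk.content_hash


store = [
    StoredChunk("LLMO is the practice of optimising content for AI answers.",
                [0.9, 0.1, 0.0],
                {"title": "What is LLMO?", "url": "https://example.com/llmo#definition"}),
    StoredChunk("FAQPage and HowTo schema describe questions and steps.",
                [0.1, 0.9, 0.2],
                {"title": "Schema for LLMO", "url": "https://example.com/llmo#schema"}),
]
score, best = search(store, [1.0, 0.0, 0.0])[0]
print(best.metadata["title"], round(score, 3))
```

Returning the metadata with each hit means a retrieval result can point straight back to the title and URL of the source section.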

FAQs

What is the main difference between SEO and LLMO?

How can I tell if my brand is visible in AI answers?

What tools help with LLMO optimisation?

Does Google still matter if AI engines dominate visibility?

LLMO vs SEO: Final Thought

Traffic hasn’t disappeared. It’s moved into AI layers where mentions, metadata, and meaning win. LLMO is how you follow it. Action the tips here to start surfacing in AI answers.

Or, reach out to us at LangSync for a custom LLMO visibility roadmap.